Meta has unveiled its largest open-source AI model to date, Llama 3.1 405B, which boasts 405 billion parameters. These parameters are indicative of the model’s problem-solving capabilities, with a higher number generally leading to better performance.

Although Llama 3.1 405B isn’t the largest open-source model ever, it is the most substantial one released in recent years. It was trained using 16,000 Nvidia H100 GPUs and incorporates advanced training and development techniques. Meta asserts that these enhancements make it competitive with top proprietary models like OpenAI’s GPT-4o and Anthropic’s Claude 3.5 Sonnet, albeit with some limitations.

Llama 3.1 405B can be downloaded or accessed via cloud platforms such as AWS, Azure, and Google Cloud. Additionally, it is being utilized on WhatsApp and Meta.ai to power a chatbot experience for users in the U.S.

New and improved

Similar to other generative AI models, both open- and closed-source, Llama 3.1 405B is capable of performing a variety of tasks. These include coding, solving basic math problems, and summarizing documents in eight languages (English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai). However, it is limited to text-based tasks and cannot, for instance, answer questions about images. It excels in handling text-based workloads, such as analyzing PDFs and spreadsheets.

Meta has announced its ongoing experiments with multimodal capabilities. According to a paper published today, the company is actively developing Llama models that can recognize images and videos, as well as understand and generate speech. These models, however, are not yet ready for public release.

To train Llama 3.1 405B, Meta utilized a dataset of 15 trillion tokens up to the year 2024. Tokens are parts of words that models can process more easily than whole words, and 15 trillion tokens equate to approximately 750 billion words. Although this isn’t an entirely new training set, as it was used for earlier Llama models, Meta claims to have refined its data curation pipelines and adopted more stringent quality assurance and data filtering methods for this model.

The company also utilized synthetic data, generated by other AI models, to fine-tune Llama 3.1 405B. While major AI vendors like OpenAI and Anthropic are exploring the use of synthetic data to enhance their AI training, some experts caution that it should be a last resort due to the risk of increasing model bias.

Meta, on its part, claims to have “carefully balanced” the training data for Llama 3.1 405B but did not disclose the exact sources of this data, aside from mentioning webpages and public web files. Many generative AI vendors consider their training data a competitive edge and thus keep details about it confidential. Additionally, revealing specifics about training data can lead to IP-related lawsuits, further discouraging companies from sharing this information.

In the mentioned paper, Meta researchers highlighted that Llama 3.1 405B was trained with a greater proportion of non-English data to enhance its performance in various languages. Additionally, it included more mathematical data and code to improve its mathematical reasoning, as well as recent web data to update its knowledge of current events.

A recent Reuters report disclosed that Meta had used copyrighted e-books for AI training despite warnings from its legal team. The company also trains its AI on Instagram and Facebook posts, photos, and captions, making it challenging for users to opt out. Furthermore, Meta and OpenAI are currently facing a lawsuit from authors, including comedian Sarah Silverman, over the alleged unauthorized use of copyrighted material for model training.

Ragavan Srinivasan, Meta’s VP of AI program management, told TechCrunch, “The training data is like the secret recipe and sauce that goes into building these models. We’ve invested heavily in this and will continue to refine it.”

Bigger context and tools

Llama 3.1 405B features a significantly larger context window than its predecessors, accommodating 128,000 tokens, which is approximately the length of a 50-page book. The context window of a model refers to the amount of input data (such as text) it can consider before generating output.

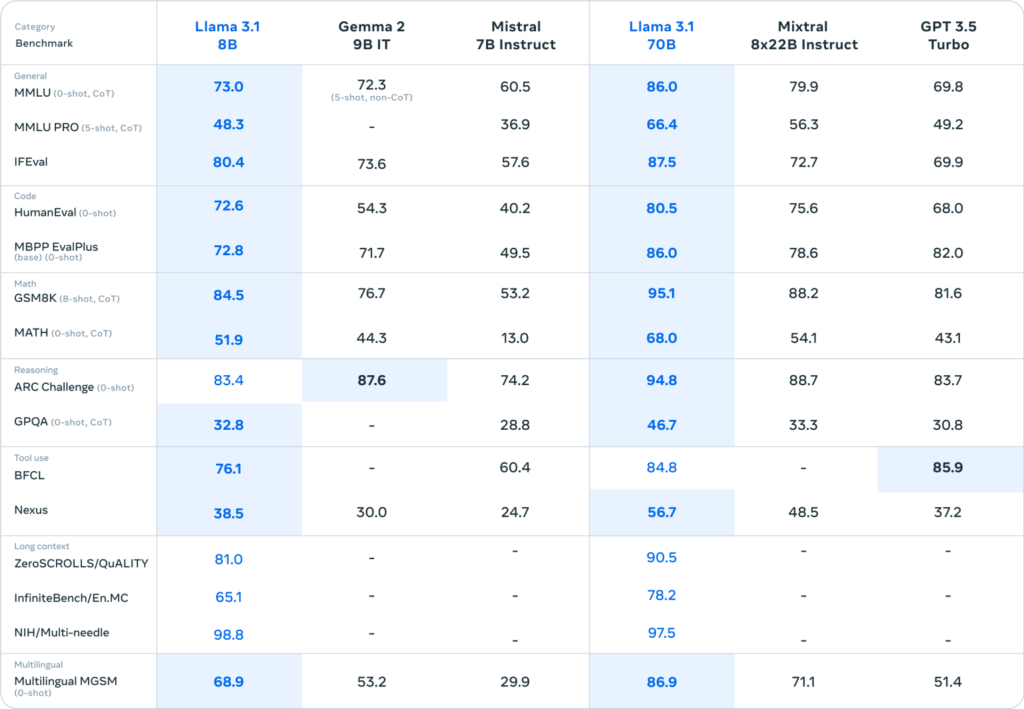

Models with larger context windows can summarize longer text passages and files more effectively. When used in chatbots, these models are also less likely to lose track of recent topics in the conversation.Meta also introduced two other new, smaller models today: Llama 3.1 8B and Llama 3.1 70B. These are updated versions of the Llama 3 8B and Llama 3 70B models released in April, and they also feature 128,000-token context windows. This is a significant upgrade from the previous models, which had context windows limited to 8,000 tokens, assuming the new models can effectively utilize the expanded context.

All Llama 3.1 models are capable of utilizing third-party tools, apps, and APIs to perform various tasks, similar to models from Anthropic and OpenAI. By default, they are trained to use Brave Search for answering questions about recent events, the Wolfram Alpha API for math and science queries, and a Python interpreter for code validation. Additionally, Meta asserts that the Llama 3.1 models can also use some tools they haven’t previously encountered, to a certain degree.

Building an ecosystem

If benchmarks are to be trusted (though they aren’t the definitive measure in generative AI), Llama 3.1 405B is a highly capable model. This is a positive development, given the noticeable limitations of earlier Llama models.

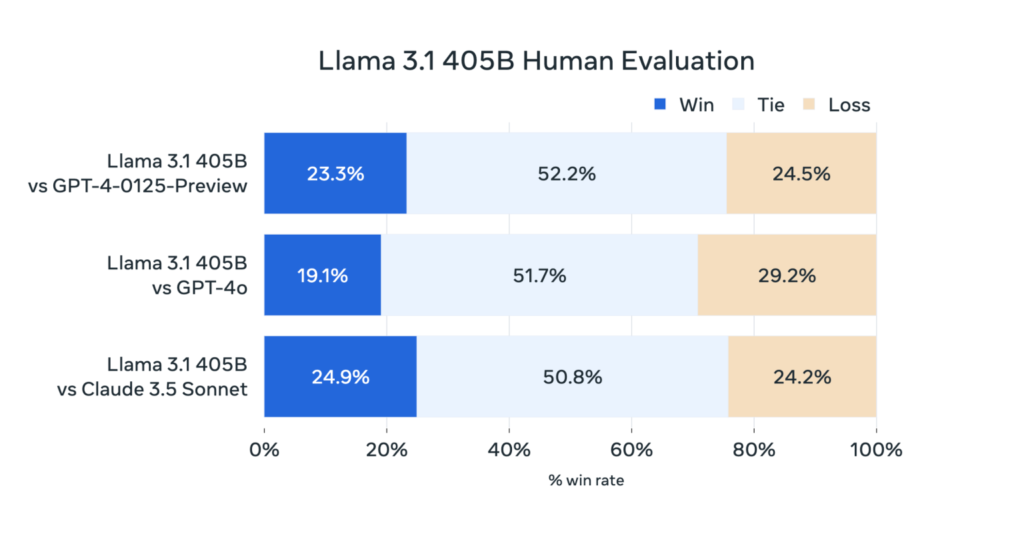

According to human evaluators hired by Meta, Llama 3.1 405B performs comparably to OpenAI’s GPT-4 and shows “mixed results” when compared to GPT-4o and Claude 3.5 Sonnet. While it excels in executing code and generating plots better than GPT-4o, its multilingual abilities are generally weaker, and it lags behind Claude 3.5 Sonnet in programming and general reasoning.

Due to its size, Llama 3.1 405B requires robust hardware, with Meta recommending at least a server node.

This might explain why Meta is promoting its smaller models, Llama 3.1 8B and Llama 3.1 70B, for general-purpose tasks like powering chatbots and generating code. Meta suggests that Llama 3.1 405B is more suited for model distillation — transferring knowledge from a large model to a smaller, more efficient one — and generating synthetic data for training or fine-tuning other models.

To support the use of synthetic data, Meta has updated Llama’s license to allow developers to use outputs from the Llama 3.1 model family to create third-party AI generative models. However, the license still restricts how developers can deploy Llama models: app developers with over 700 million monthly users must request a special license from Meta, which the company will grant at its discretion.

Meta’s recent licensing change regarding outputs addresses a major criticism from the AI community and is part of the company’s strong push to gain influence in the generative AI space.

In addition to the Llama 3.1 family, Meta is introducing a “reference system” and new safety tools designed to prevent Llama models from responding unpredictably or undesirably. These initiatives aim to encourage developers to integrate Llama into more applications. Meta is also previewing and seeking feedback on the Llama Stack, an upcoming API for tools that can fine-tune Llama models, generate synthetic data, and create “agentic” applications — apps that can act on behalf of users.

“We have consistently heard from developers about their interest in learning how to deploy [Llama models] in production,” said Ragavan Srinivasan, Meta’s VP of AI program management. “So we’re providing them with a variety of tools and options to help them do that.”

Play for market share

In an open letter published this morning, Meta CEO Mark Zuckerberg outlined a vision for the future where AI tools and models are accessible to more developers worldwide, ensuring that people can benefit from AI’s “opportunities and advantages.”

While the letter has a philanthropic tone, it also reveals Zuckerberg’s intention for these tools and models to be developed by Meta.

Meta is striving to catch up with companies like OpenAI and Anthropic by using a familiar strategy: offering tools for free to build an ecosystem and then gradually introducing paid products and services. By investing billions in models that can be commoditized, Meta aims to lower competitors’ prices and widely disseminate its version of AI. This approach also allows Meta to integrate improvements from the open-source community into future models.

Llama has certainly captured developers’ interest. Meta reports that Llama models have been downloaded over 300 million times, and more than 20,000 Llama-derived models have been created.

Meta is serious about its ambitions, spending millions on lobbying regulators to support its preferred version of “open” generative AI. Although the Llama 3.1 models do not resolve all the current issues with generative AI, such as fabricating information and repeating problematic training data, they help Meta move closer to becoming synonymous with generative AI.

There are costs associated with this. In a research paper, co-authors, echoing Zuckerberg’s recent comments, discuss the energy-related challenges of training Meta’s increasingly large generative AI models.

“During training, tens of thousands of GPUs may simultaneously increase or decrease power consumption, for example, due to all GPUs waiting for checkpointing or collective communications to finish, or the startup or shutdown of the entire training job,” they write. “This can cause instant fluctuations in power consumption across the data center, stretching the power grid’s limits. This is an ongoing challenge as we scale training for future, even larger Llama models.”

Hopefully, training these larger models won’t necessitate keeping old coal-burning power plants operational.