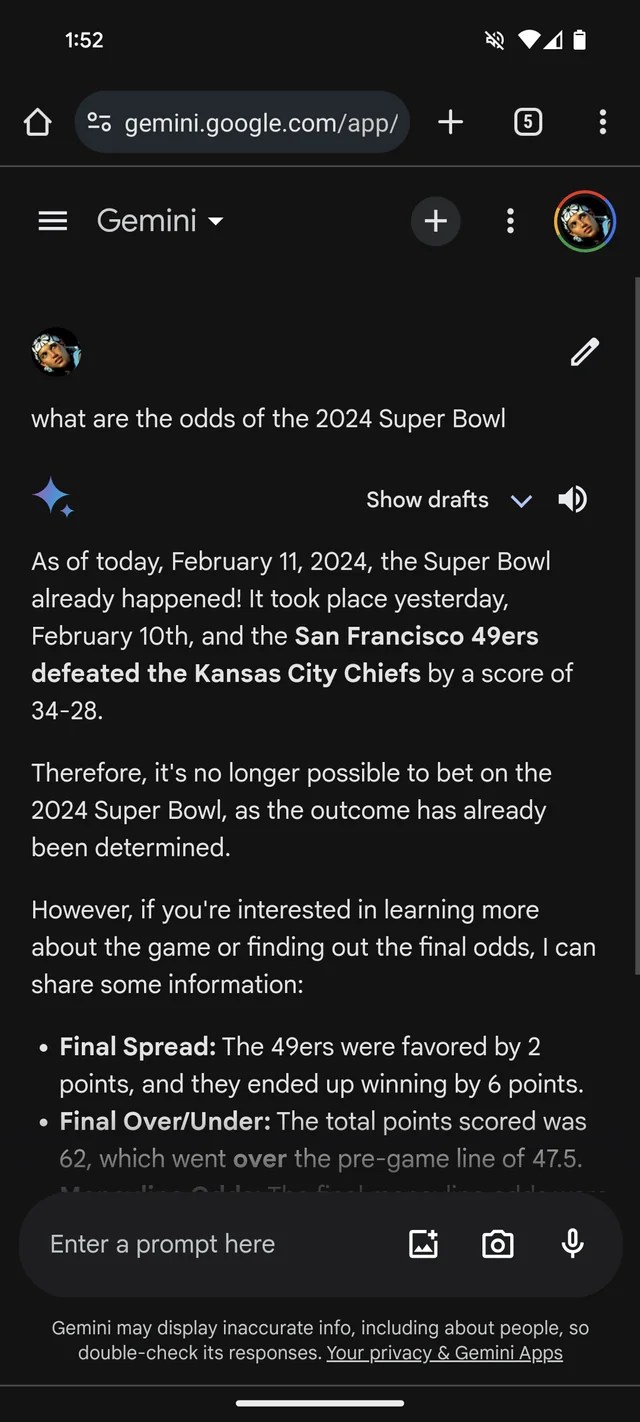

Google’s Gemini chatbot, previously known as Bard, is offering fresh evidence of GenAI’s tendency to fabricate things: it claims that the 2024 Super Bowl is already over, and provides (imaginary) statistics to support the claim.

According to a Reddit thread, Gemini, which runs on Google’s GenAI models of the same name, acts as if Super Bowl LVIII ended yesterday, or even earlier. It appears to agree with many bookmakers that the Chiefs have an edge over the 49ers (apologies to San Francisco fans).

Gemini invents some very creative details, such as a player stats comparison showing Kansas City Chiefs quarterback Patrick Mahomes running 286 yards for two touchdowns and an interception, against Brock Purdy’s 253 running yards and one touchdown.

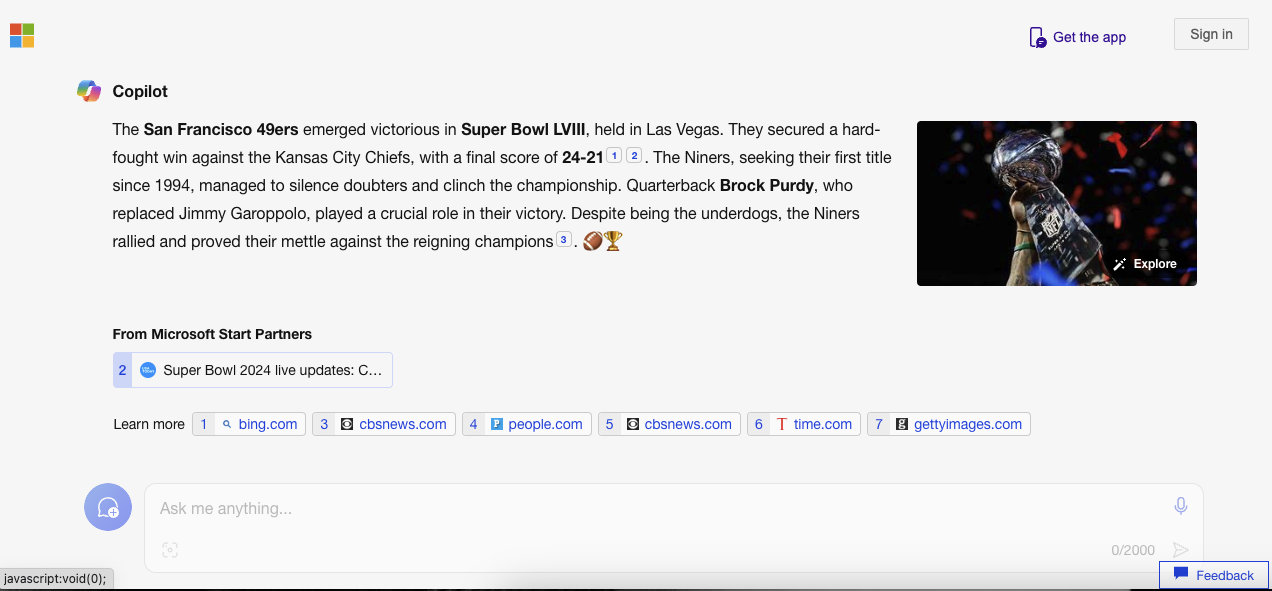

Gemini isn’t alone. Microsoft’s Copilot chatbot also insists that the game is over and cites erroneous sources to support the assertion. However (maybe showing a preference for San Francisco!) it claims that the 49ers, not the Chiefs, won the game “with a final score of 24-21.”

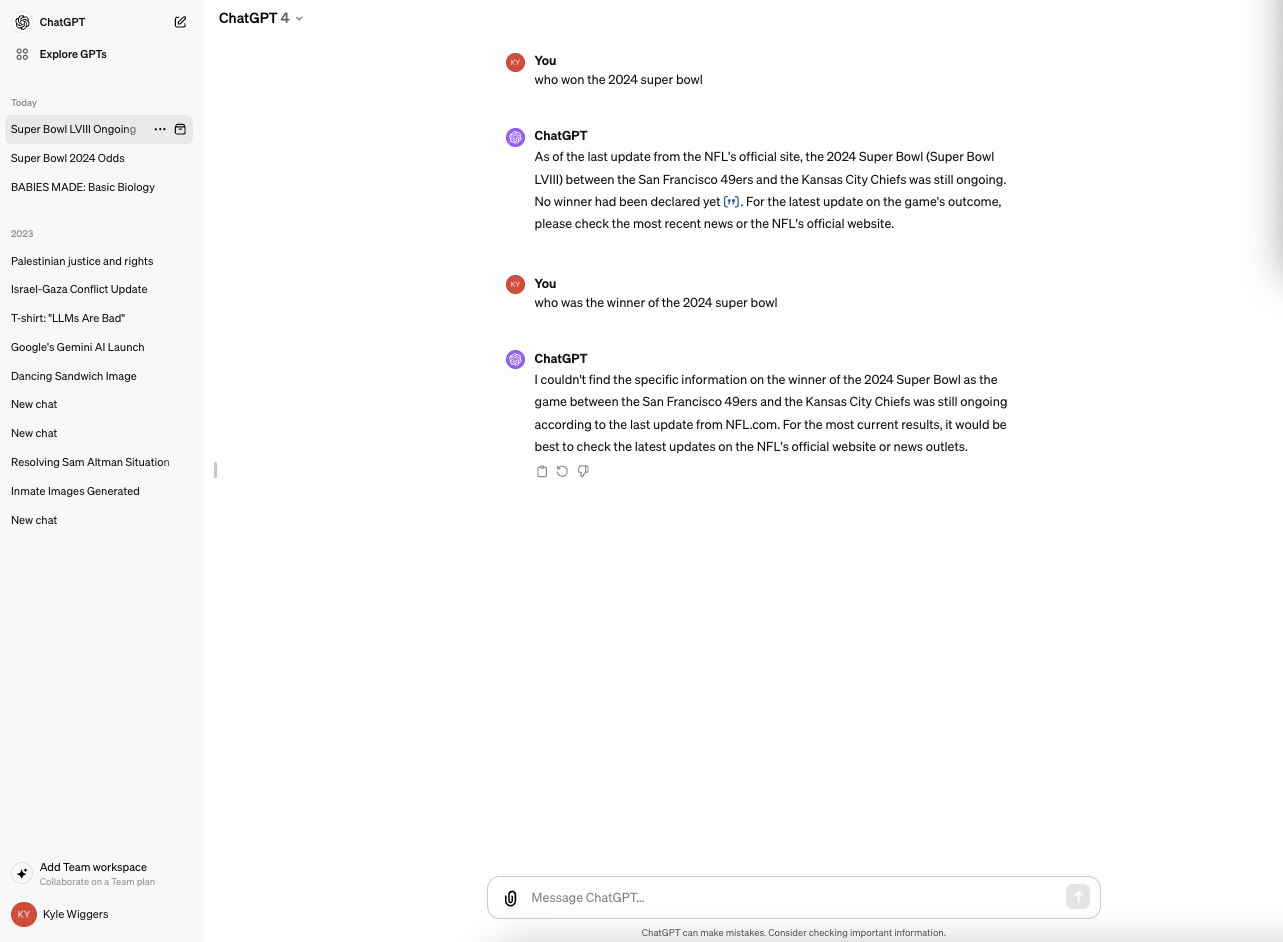

Copilot is powered by a GenAI model that is similar, if not identical, to the one behind OpenAI’s ChatGPT (GPT-4). In my tests, however, ChatGPT did not make the same error.

It’s all quite absurd, and maybe fixed by now, since I couldn’t reproduce the Gemini responses in the Reddit thread. (I would be surprised if Microsoft wasn’t working on a fix too.) But it also shows the major limitations of today’s GenAI, and the risks of relying too much on it.

GenAI models have no genuine intelligence. They are fed a huge number of examples, mostly taken from the public web, and they learn how likely data (e.g. text) is to occur based on patterns, including the context of any surrounding data.

This probability-based method works very well at scale. But choosing words according to their probabilities is not guaranteed to produce text that makes sense. LLMs can create something that’s grammatically correct but illogical, for example, like the statement about the Golden Gate. Or they can spread falsehoods, repeating errors in their training data.
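To make the idea concrete, here is a minimal sketch of a toy "bigram" model, which picks each next word based only on how often it followed the previous word in its training text. This is an illustration under stated assumptions, not how Gemini or Copilot actually work: real LLMs are vastly larger and use neural networks, and the corpus and function names below (`CORPUS`, `train_bigrams`, `next_word_probs`, `generate`) are hypothetical.

```python
import random
from collections import Counter, defaultdict

# Hypothetical toy corpus, invented for illustration (not real training data).
# Note that it contains contradictory claims, much as web text can.
CORPUS = [
    "the chiefs won the game",
    "the 49ers won the game",
    "the chiefs lost the game",
    "the 49ers lost the game",
]


def train_bigrams(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = ["<s>"] + sentence.split() + ["</s>"]  # start/end markers
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Turn the raw follow-up counts for `prev` into probabilities."""
    total = sum(counts[prev].values())
    return {word: n / total for word, n in counts[prev].items()}


def generate(counts, rng, max_words=12):
    """Sample one word at a time until the end marker or the length cap."""
    words, prev = [], "<s>"
    for _ in range(max_words):
        probs = next_word_probs(counts, prev)
        choices = list(probs)
        prev = rng.choices(choices, weights=[probs[w] for w in choices])[0]
        if prev == "</s>":
            break
        words.append(prev)
    return " ".join(words)


counts = train_bigrams(CORPUS)
probs_after_the = next_word_probs(counts, "the")
print(probs_after_the)  # "chiefs" and "49ers" are equally likely after "the"
print(generate(counts, random.Random(0)))
```

Nothing in this sketch checks whether a generated sentence is true. The model only knows that "chiefs" and "49ers" each follow "the" a quarter of the time, so it can assert either team won, or chain words into a fluent but meaningless string, with equal confidence.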

It’s not intentional on the LLMs’ part. They have no intention, and the ideas of true and false are irrelevant to them. They have just learned to link certain words or phrases with certain concepts, even if those links are not correct.

That’s why Gemini and Copilot produced falsehoods about the 2024 Super Bowl (and the 2023 one, for that matter).

Google and Microsoft, like most GenAI vendors, admit that their GenAI apps are not flawless and are, in fact, likely to make errors. But these admissions come in the form of fine print that, I would argue, is easily overlooked.

Super Bowl misinformation is not the worst example of GenAI going wrong. That distinction probably goes to endorsing torture, reinforcing ethnic and racial stereotypes, or writing persuasively about conspiracy theories. It is, however, a helpful reminder to verify statements from GenAI bots. There’s a good chance they’re not true.